Wherever one looks nowadays, there is an LLM 'prompt for that', whatever 'that' happens to be. This is certainly the case in education. Whatever you need to get done, the most proactive advice is to prompt away in your chosen LLM and tag the job as done.

Google have released Gemini for Education; OpenAI is going crazy with GPT partnerships in education; and Magic School and many others have been window dressing GPT for years now. What, you ask, is being achieved in the classroom with all of this LLM airtime? More content is the answer, and it makes sense. LLMs are made to produce and synthesise content from their enormous belly of ingested material.

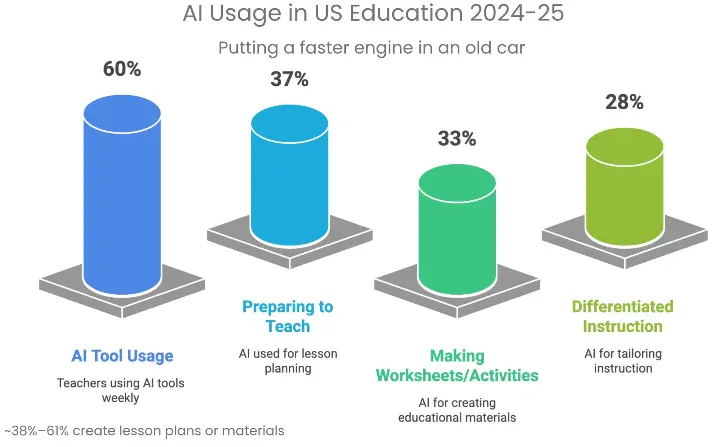

A recent Gallup poll in the US shows that 60% of teachers use AI weekly to create lesson plans and materials. Creating more learning and assessment content with LLMs does not fundamentally address students' contextual needs, core pedagogical principles, or alignment with standards and curricula. It sure does, however, feel good to have all that 'stuff' written for you at warp speed.

We suggest there are five key concerns with using LLM-created content for assessment of learning, assessment as learning and assessment for learning.

1. Quality & Accuracy Risks

- Hallucinations: LLMs can generate plausible but incorrect questions, answers, or explanations.

- Misalignment: Content may not match the syllabus, exam formats, or local learning objectives.

- Inconsistency: The same prompt can produce output of different quality each time, which makes reliability hard to guarantee.

2. Pedagogical Weakness

- No developmental progression: LLMs don’t naturally scaffold questions in the right order of difficulty.

- Shallow alignment: Questions and tasks may look good on the surface but fail to build the underlying cognitive skills.

- Lack of evidence-based design: Unlike publisher materials, LLM-generated worksheets aren’t tested or validated with students.

3. Equity & Trust Concerns

- Uneven teacher skill: Some teachers can craft strong prompts; others struggle, leading to inconsistent outcomes across classrooms.

- Trust gap with parents: Parents may doubt the credibility of “AI-generated” worksheets compared to recognised publishers.

- Equity gap: Students may be exposed to uneven quality depending on how well their teacher uses the tool.

4. Hidden Workload Increase

- Verification needed: Teachers must check every question and answer for accuracy, which can take longer than creating the materials themselves.

- Formatting issues: LLMs don’t always output clean, classroom-ready worksheets, requiring editing, layout, and polish.

- Reinvention of the wheel: Teachers spend time generating “new” content that may duplicate existing high-quality workbook materials.

5. Student Impact

- Confusion risk: Poorly phrased or incorrect AI-generated questions can reinforce misconceptions.

- Lack of feedback loop: Worksheets from LLMs are static unless paired with marking/feedback systems, leaving teachers with more grading to do.

Bottom line for teachers:

Using LLMs to create worksheets can feel like a shortcut, but it often creates hidden costs in accuracy-checking, quality assurance, and alignment. It risks undermining trust and consistency.